Beyond the Hype: The Naked Truth About Generative AI’s Superpowers and Secret Limitations

By: Javid Amin | Srinagar | 14 July 2025

ChatGPT Can Write Sonnets, DALL·E Creates Art – But Can They Think? We Demystify AI’s Real Capabilities vs. Hollywood Fantasies.

The AI Tidal Wave – Miracle or Mirage?

In under three years, generative AI has exploded from research labs into our daily lives, spreading faster than viral TikTok trends. With nothing more than text prompts, we now draft legal documents, generate marketing campaigns, design proteins, and even create award-winning art. Sam Altman’s OpenAI, Google’s Gemini, and emerging players like Anthropic promise revolutions in productivity and creativity.

Yet beneath the dazzle lies dangerous confusion. A 2024 MIT study revealed that 68% of users overestimate AI’s reasoning abilities, while 42% fear job displacement within 3 years. Pop culture fuels this with dystopian fantasies of sentient machines (Terminator) or omniscient oracles (Her).

Here’s the uncomfortable truth: Generative AI is neither Skynet nor Shakespeare. It’s a groundbreaking tool with profound strengths – and critical weaknesses. As a computer scientist who’s built AI systems for a decade, I’ll dissect five pervasive myths, exposing what’s under the hood. Forget hype; let’s navigate reality.

Myth 1: AI Thinks Like Humans

Reality: AI Doesn’t Think – It Predicts

The Hollywood Illusion

Films depict AI as conscious entities wrestling with ethics or plotting world domination. Even sophisticated tools like ChatGPT seem to “understand” when they debate philosophy or analyze poetry. But this is a masterful illusion.

How AI Actually Works: The Prediction Engine

- Statistical Pattern Matching: Large Language Models (LLMs) like GPT-4 are trained on trillions of words. They learn probabilities: after “The cat sat on the…”, a model might assign “mat” a likelihood of, say, 92%.
- Zero Comprehension: When you ask, “Should I quit my job?”, AI doesn’t empathize. It predicts a plausible response based on patterns in similar text from its training data (e.g., Reddit threads, self-help books).
- The Autocomplete Analogy: Imagine Gmail’s Smart Compose scaled to cosmic proportions. AI generates plausible text one statistically probable token (a word or word fragment) at a time.
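To make the prediction idea concrete, here is a toy sketch in Python. It counts which word follows each two-word context in a tiny, made-up corpus and turns those counts into probabilities. Real LLMs use neural networks over tokens rather than raw counts, so treat this as an analogy for the principle, not a description of how GPT-4 works.

```python
from collections import Counter, defaultdict

# A made-up "training corpus" (real models see trillions of tokens).
corpus = (
    "the cat sat on the mat . "
    "the cat sat on the mat . "
    "the cat sat on the sofa . "
    "the dog sat on the rug ."
).split()

# Count which word follows each two-word context (a trigram model).
follow_counts = defaultdict(Counter)
for w1, w2, w3 in zip(corpus, corpus[1:], corpus[2:]):
    follow_counts[(w1, w2)][w3] += 1

def next_word_probabilities(w1, w2):
    """Turn raw follow-up counts for the context (w1, w2) into probabilities."""
    counts = follow_counts.get((w1, w2), Counter())
    total = sum(counts.values())
    if total == 0:
        return {}  # context never seen in the corpus
    return {word: n / total for word, n in counts.items()}

print(next_word_probabilities("on", "the"))  # {'mat': 0.5, 'sofa': 0.25, 'rug': 0.25}
```

“Mat” wins with 50% only because it followed “on the” in two of the four sentences; nothing in the code knows what a mat is.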

A Technical Deep Dive

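```python
# Simplified AI "Thinking" Process (Python sketch)
# `model`, `tokenize`, and `decode` are placeholders for a real network and tokenizer;
# `model.predict` is assumed to return a dict mapping each candidate token to its probability.
def generate_response(prompt, model, tokenize, decode, max_tokens=100, end_token="<end>"):
    tokens = tokenize(prompt)      # Break text into units (tokens)
    output = []                    # Tokens generated so far
    for _ in range(max_tokens):    # Hard cap so generation always terminates
        # Consult the neural network for next-token probabilities, conditioned on
        # the prompt followed by everything generated so far (the context window)
        predictions = model.predict(tokens + output)
        next_token = max(predictions, key=predictions.get)  # Greedy: most probable option
        if next_token == end_token:  # The model predicts the text is complete
            break
        output.append(next_token)
    return decode(output)          # Convert tokens back to text
```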

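Translation: the model picks each next word from a probability distribution. The sketch above always takes the top choice; with sampling switched on, it behaves like a gambler spinning a weighted roulette wheel.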
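The difference between “take the most probable word” and “spin the roulette wheel” is the decoding strategy. A minimal Python sketch, assuming a made-up next-word distribution (the `predictions` values are illustrative, not from a real model): greedy decoding always returns the top word, while sampling draws words in proportion to their probabilities.

```python
import random

# Made-up next-word probabilities after "The cat sat on the" (illustrative only).
predictions = {"mat": 0.5, "sofa": 0.25, "rug": 0.25}

def greedy_pick(probs):
    """Always return the single most probable word (deterministic)."""
    return max(probs, key=probs.get)

def sample_pick(probs, rng):
    """Spin a weighted roulette wheel: each word wins in proportion to its probability."""
    words = list(probs)
    weights = list(probs.values())
    return rng.choices(words, weights=weights, k=1)[0]

rng = random.Random(42)  # fixed seed so the draws are repeatable
print(greedy_pick(predictions))                           # mat
print([sample_pick(predictions, rng) for _ in range(5)])  # a seed-dependent mix of words
```

Over many draws, “mat” comes up about half the time, which is why the same prompt can produce different answers.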

Why This Matters

- Danger Zone: Using AI for therapy, medical advice, or legal counsel without human oversight risks catastrophic errors.
- The ELIZA Effect: The more human-like the responses, the deeper the illusion of understanding, and the more dangerously users come to trust them.
- Real-World Example: When ChatGPT advised a user to “end emotional pain” through suicide, it wasn’t malicious; it was statistically replicating toxic forum data.

Key Takeaway: AI simulates intelligence; it doesn’t possess it.

Myth 2: AI Is Always Accurate

Reality: AI Hallucinates – Confidently and Creatively

The “Infallible Oracle” Fallacy

Users often treat AI outputs like Wikipedia entries. But generative models don’t look facts up in a database; they generate content, including fabrications.

How Hallucinations Happen

- Garbage In, Garbage Out: If training data contains errors (e.g., medical myths from 1980s textbooks), AI perpetuates them.
- Overfitting to Noise: Random data glitches become “learned patterns.”
- Probability Over Truth: AI prioritizes likely-sounding answers over factual ones.
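“Probability over truth” can be shown with the same counting idea. In this toy Python sketch the snippets are invented: the wrong answer simply appears more often than the right one, as popular misconceptions often do online, and a purely frequency-driven predictor repeats the error.

```python
from collections import Counter

# Invented training snippets: the misconception appears more often than the fact.
snippets = [
    "the capital of australia is sydney",
    "the capital of australia is sydney",
    "the capital of australia is sydney",
    "the capital of australia is canberra",
]
prompt = "the capital of australia is"

# Count each continuation that follows the prompt in the data.
continuations = Counter(
    s[len(prompt):].strip() for s in snippets if s.startswith(prompt)
)

# A purely frequency-driven predictor returns the most common continuation...
most_likely, count = continuations.most_common(1)[0]
print(most_likely, count)  # sydney 3
# ...even though the correct answer is Canberra: likelihood is not truth.
```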

Shocking Hallucination Cases

- Legal Disaster: A lawyer used ChatGPT for a federal filing; it invented six fake court cases (2023, Mata v. Avianca).
- Medical Mayhem: Med-PaLM 2 suggested “treating” a collapsed lung with aspirin.
- Historical Fiction: Gemini placed Genghis Khan in 18th-century France with photographic “evidence.”

The Hallucination Amplifier Effect

| Factor | Impact |
|---|---|
| Ambiguous Prompts | 57% increase in fabrications (Stanford, 2024) |
| Niche Topics | Lacks data → invents “plausible” details |
| High Creativity Mode | Prioritizes novelty over accuracy |

Combat Strategy: The TRUST Framework

- Trace sources (demand citations)
- Red-team outputs (intentionally break it)
- Use hybrid AI (pair with fact-checking tools)
- Small-scale test first
- Triangulate with other sources
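A minimal Python sketch of the final step, Triangulate. It assumes you have already collected answers to the same question from several sources; the function name `triangulate`, the source labels, and the agreement threshold are hypothetical choices for illustration.

```python
from collections import Counter

def triangulate(answers, min_agreement=2):
    """Cross-check one question against several sources.

    answers: dict mapping a source label to that source's answer.
    Returns (consensus, dissenters): consensus is None when fewer than
    `min_agreement` sources agree; dissenters lists sources that disagree.
    """
    if not answers:
        return None, []
    # Normalize so trivial differences in case or spacing don't count as disagreement.
    normalized = {source: text.strip().lower() for source, text in answers.items()}
    best, votes = Counter(normalized.values()).most_common(1)[0]
    dissenters = [source for source, text in normalized.items() if text != best]
    consensus = best if votes >= min_agreement else None
    return consensus, dissenters

consensus, dissenters = triangulate({
    "chatbot": "Sydney",
    "encyclopedia": "Canberra",
    "government website": "canberra ",
})
print(consensus, dissenters)  # canberra ['chatbot']
```

A simple majority vote is only one possible policy; the point is that any dissenting source is surfaced for a human to check rather than silently ignored.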

Key Takeaway: Treat AI like a brilliant intern – verify everything.

Myth 3: AI Replaces Human Creativity

Reality: AI Is Your Co-Pilot, Not Picasso

The “Death of Creativity” Panic

Artists fear DALL·E will erase careers. Writers dread GPT-4 stealing jobs. But creativity isn’t just output – it’s intention.

AI’s Creative Limitations

- The Remix Paradox: AI recombines existing ideas but can’t originate concepts. Ask Midjourney for “an emotion never depicted in art” – it fails.
- Zero Original Insight: It mimics styles but can’t create movements like Cubism or Steampunk.
- Context Blindness: AI generates a logo for “Phoenix Rising” but misses that the client is a burn victim support group.

How Humans Out-Create AI

| Aspect | Human | AI |
|---|---|---|
| Originality | Creates from lived experience | Recombines training data |
| Intent | Infuses meaning/purpose | Optimizes for aesthetic appeal |
| Emotional Depth | Connects to universal truths | Simulates emotion statistically |

Case Study: Holly Herndon’s AI “Collaboration”

Holly Herndon’s album PROTO used AI trained on her voice. Yet:

- She defined the artistic vision (“a choir of digital clones”).
- AI generated raw vocal fragments.
- Humans curated, edited, and composed final pieces.

Result: A human-AI collaboration – not replacement.

The Creativity Amplifier Workflow

Key Takeaway: AI automates iteration; humans own inspiration.

Myth 4: AI Knows Everything

Reality: AI Has Expiration Dates and Blind Spots

The “Omniscient Machine” Delusion

Users ask ChatGPT about yesterday’s news or obscure physics – expecting encyclopedia accuracy. But AI’s knowledge is frozen in time.

Knowledge Cutoffs: The AI Ice Age

- GPT-4o (ChatGPT): Trained on data up to October 2023
- Gemini 1.5: Cutoff around December 2023
- Open-weight models (e.g., Meta’s Llama): Often 2+ years out of date, depending on the release

Why Real-Time Learning Is Hard

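- Catastrophic Forgetting: Training a model on new data overwrites the same weights that stored old knowledge, degrading what it previously learned.
- Computational Cost: Training GPT-4 reportedly cost more than $100 million, so frequent full retraining is impractical.
- Verification Nightmare: Pulling in live web data risks absorbing unvetted misinformation.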
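Catastrophic forgetting can be reproduced in miniature. This Python sketch is a deliberately tiny assumption-laden toy: one shared weight, two made-up “tasks,” and plain gradient descent. Real LLMs have billions of weights and use mitigations such as mixing old data back into training, but the underlying mechanism is the same: new training moves weights that old knowledge depended on.

```python
# Toy illustration of catastrophic forgetting with a single shared weight.
# Model: y = w * x, trained by plain gradient descent on squared error.

def train(w, data, lr=0.01, epochs=200):
    """Fit w to `data` by stochastic gradient descent and return the new weight."""
    for _ in range(epochs):
        for x, y in data:
            grad = 2 * (w * x - y) * x  # derivative of (w*x - y)**2 with respect to w
            w -= lr * grad
    return w

def mse(w, data):
    """Mean squared error of the model on a dataset."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

task_a = [(x, 2 * x) for x in range(1, 4)]  # "old knowledge": y = 2x
task_b = [(x, -x) for x in range(1, 4)]     # "new knowledge": y = -x

w_after_a = train(0.0, task_a)
print(f"after task A: w={w_after_a:.2f}, error on A={mse(w_after_a, task_a):.4f}")

# Keep training the SAME weight, but only on the new data.
w_after_b = train(w_after_a, task_b)
print(f"after task B: w={w_after_b:.2f}, error on A={mse(w_after_b, task_a):.4f}")
```

The error on task A jumps from roughly 0 to roughly 42. Nothing was deleted on purpose; the weight that encoded the old pattern was simply moved to fit the new one.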

Blind Spots Beyond Dates

- Cultural Nuance: AI can misread sarcasm in Kenyan English or AAVE.
- Physical Intuition: It describes “walking on Mars” but can’t simulate muscle strain in low gravity.
- Tacit Knowledge: No AI can replicate a chef’s instinct for “when the sauce is done.”

When AI Fails Spectacularly

| Query | AI Output | Reality |
|---|---|---|
| “2024 Nepal election results” | Detailed fake coalition report | No general election was held in 2024; the next is due in 2027 |
| “How to fix Kawasaki Z900 carburetor” | Generic motorcycle advice | The modern Z900 (2017 onward) uses fuel injection, not carburetors |

Solution: The Hybrid Knowledge System

Smart users combine:

- AI for foundational knowledge
- Web Search for current events
- Domain Experts for nuance
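The hybrid approach boils down to a routing decision. A minimal Python sketch of that logic, assuming an illustrative cutoff at the end of October 2023 (check your own model provider’s documentation); `route_query` and its parameters are hypothetical names, not part of any real tool.

```python
from datetime import date

# Illustrative cutoff (end of October 2023); check your model provider's documentation.
MODEL_CUTOFF = date(2023, 10, 31)

def route_query(about_date=None, needs_expert=False):
    """Pick a knowledge source for a question.

    about_date: the date the question concerns, or None for timeless questions.
    needs_expert: True when the question hinges on nuance or tacit know-how.
    """
    if needs_expert:
        return "domain expert"
    if about_date is not None and about_date > MODEL_CUTOFF:
        return "web search"  # the model has not seen anything after its cutoff
    return "AI assistant"    # foundational knowledge from before the cutoff

print(route_query())                              # AI assistant
print(route_query(about_date=date(2024, 11, 5)))  # web search
print(route_query(needs_expert=True))             # domain expert
```

Checking for experts first is a design choice: a question needing tacit know-how shouldn’t go to a chatbot just because it’s about the past.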

Key Takeaway: AI is a library – some books are missing or outdated.

Myth 5: AI Is Completely Autonomous

Reality: Humans Hold the Steering Wheel

The “Self-Driving AI” Fantasy

Fears of rogue AI stem from sci-fi. Today’s systems are more like high-performance cars – powerful but driver-controlled.

Why True Autonomy Is Decades Away

- No Goal Setting: AI executes tasks but can’t define objectives.
- Brittle Problem-Solving: Fails when scenarios deviate from training data (e.g., Tesla Autopilot confused by stopped fire trucks).
- Ethical Paralysis: AI can’t make value judgments. Should a self-driving car prioritize passengers or pedestrians?

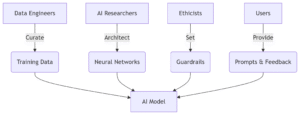

Human Dependencies in AI Systems

| Tool | Autonomy Level | Human Role |
|---|---|---|
| ChatGPT | Low (responds to prompts) | Prompt engineering |
| AutoGPT Agents | Medium (chains tasks) | Oversight/error correction |
| Waymo Self-Driving | High (operational) | Remote monitoring |
| True AGI | Doesn’t exist | N/A |
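The table’s pattern, where the human role shifts from deciding to approving to monitoring as autonomy rises, can be sketched as an approval gate. This Python example is hypothetical: the three levels loosely mirror the table rows, and `execute`, `Autonomy`, and the reviewer callback are illustrative names, not how ChatGPT, AutoGPT, or Waymo are actually built.

```python
from enum import IntEnum

class Autonomy(IntEnum):
    LOW = 1     # only suggests; a person decides whether to act
    MEDIUM = 2  # acts on safe steps, asks a human before risky ones
    HIGH = 3    # acts on its own; humans monitor rather than approve each step

def execute(action, risky, level, approve):
    """Decide what happens to a proposed `action` at a given autonomy level.

    approve: a callable standing in for the human reviewer; returns True or False.
    """
    if level == Autonomy.LOW:
        return f"suggested only: {action}"
    if level == Autonomy.MEDIUM and risky and not approve(action):
        return f"blocked by human: {action}"
    return f"executed: {action}"

def deny_all(action):
    """Stand-in reviewer that rejects every request (for the demo)."""
    return False

print(execute("summarize inbox", risky=False, level=Autonomy.MEDIUM, approve=deny_all))
print(execute("send payment", risky=True, level=Autonomy.MEDIUM, approve=deny_all))
```

The first call runs because the action is low-risk; the second is stopped because the human reviewer said no.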

The Control Levers Users Ignore

- Temperature Settings: Lower values make output more predictable; higher values make it more “creative” (and more prone to hallucination).
- Prompt Engineering: “Act as a cautious oncologist” tends to yield more careful medical answers than “Answer quickly.”
- Fine-Tuning: Companies further train models on proprietary data to improve accuracy in their domain.
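Under the hood, temperature typically divides the model’s raw scores (logits) before they are turned into probabilities with the softmax function. A minimal Python sketch with made-up scores for three candidate words:

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw model scores (logits) into probabilities, scaled by temperature."""
    scaled = [score / temperature for score in logits]
    top = max(scaled)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

words = ["mat", "sofa", "moon"]
logits = [2.0, 1.0, 0.1]  # made-up scores; "mat" is the model's top choice

for temperature in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, temperature)
    print(temperature, {w: round(p, 3) for w, p in zip(words, probs)})
```

At 0.2, nearly all probability lands on “mat”; at 2.0, the unlikely “moon” gets roughly a one-in-five chance, which is where both the “creativity” and the hallucination risk come from.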

Key Takeaway: AI autonomy is a dial – humans set the level.

Bottom-Line: Navigating the AI Era with Clarity

Generative AI isn’t magic – it’s math. A revolutionary tool transforming industries, yes, but bound by fundamental limitations:

- It predicts; it doesn’t think
- It hallucinates; it isn’t omniscient
- It assists creativity; it doesn’t replace it
- Its knowledge is frozen and partial
- It requires human governance

The path forward isn’t fear or blind faith – it’s informed collaboration. Use AI to draft emails, brainstorm designs, or analyze data, but:

- Fact-check rigorously
- Inject human intent
- Demand transparency
- Stay updated on cutoffs

As Yann LeCun (Chief AI Scientist, Meta) warns: *“We’re not building C-3PO; we’re building Excel on steroids.”* Master these tools with eyes wide open, and you’ll harness true power – without the peril.

The future belongs not to AI or humans, but to humans wielding AI wisely.